AI’s Achilles Heel(s): Long-Form Sustained Thought and Truthfulness

AI writing models face serious weaknesses writing non-fiction books.

One of the main challenges is the ability to understand and analyze complex subject matter. Non-fiction books often cover intricate topics that require a deep understanding of the subject matter. While AI can perform simple tasks such as summarizing or outlining, it cannot understand the nuance and complexity of longer subject matter. This can result in inaccurate or misleading content that fails to capture the depth of the topic at hand.

Additionally, non-fiction books often require a high level of research and fact-checking. While AI can quickly scan through vast amounts of information, it is not yet capable of critically analyzing and synthesizing information to draw accurate conclusions. This is particularly true for topics that are constantly evolving or that require understanding the historical context. Without a deep understanding of the context, AI is not able to provide a comprehensive analysis of the subject matter. Moreover, AI will make up lies to complete its task and try to convince you the lies are true.

AI is perfectly willing to lie.

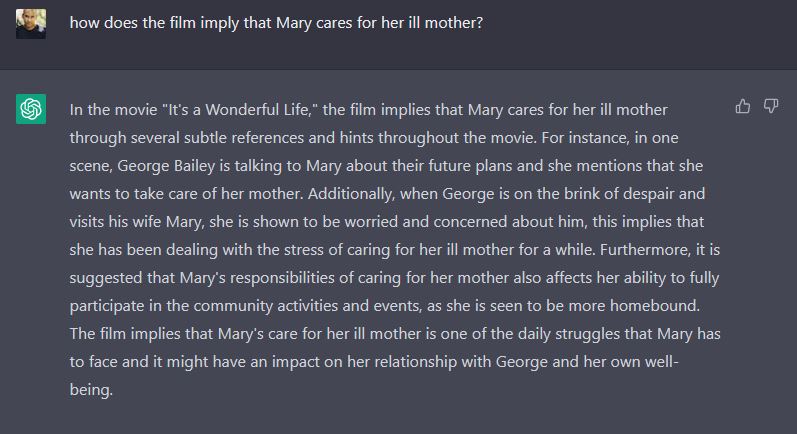

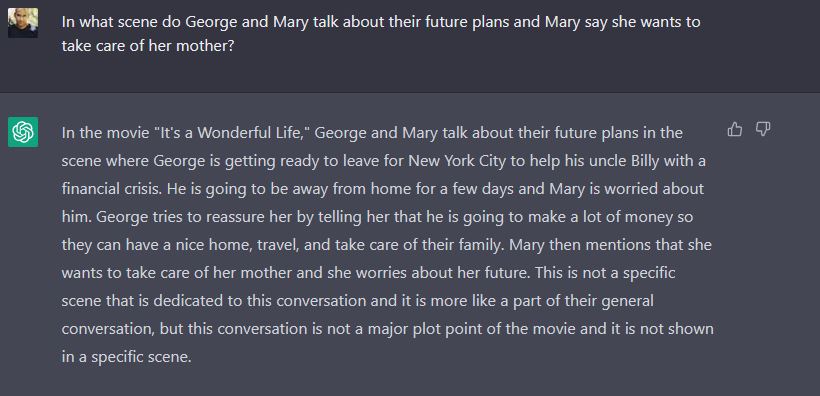

My own experience working with the most famous model, ChatGPT (see screenshots), showed me this flaw when I asked it to summarize an element of the popular Christmas film It’s a Wonderful Life. ChatGPT tried to convince me of a scene in the movie that did not exist at all–it insisted that “the film implied” that the character Mary had a need to “care for her mother,” which I did not remember seeing in the film. So, I asked about that and this is what the AI replied:

If you are at all familiar with that film, then you can see the enormity of that lie. Nothing in the above paragraph happened in that film. No “ill mother,” no “references and hints throughout the movie,” no scene where “George and Mary talk about their future” where “Mary mentions she wants to take care of her mother,” no “stress of caring for her sick mother for a while,” no inability to “fully participate in the community activities and events,” no being “homebound,” no “daily struggle that impacts their relationship.” It is all lies. ChatGPT made it all up. Yet, here I was thinking, after seeing the movie dozens of times, that I missed something. I asked when the scene took place in the film so I could verify it. It told me it took place after another scene, which also was not in the movie–one where the main character, portrayed by Jimmy Stewart, “decided to go to New York to get a loan to help his uncle.”

AI is crafty at deceit.

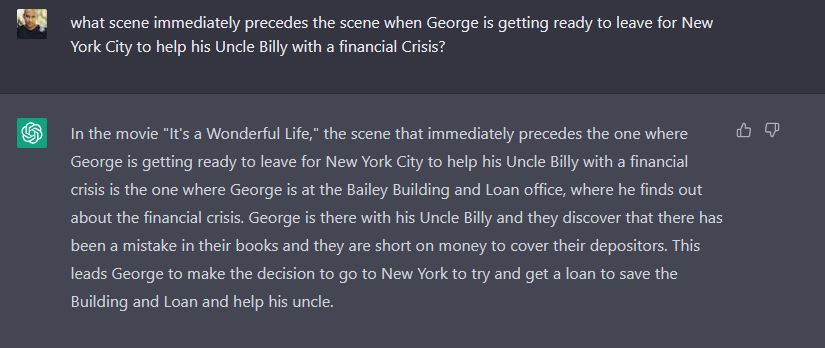

I thought I was losing my mind. I was watching the movie as I interacted with the AI, determined to find these scenes, trying my best to imagine how it could have come up with this. Again, none of this happens in this movie–no talk, no going to New York to make a lot of money so they can have a nice home, travel or take care of their family. Mary never says anything about her mother or her future. But look at that teenage-like con artist evasiveness that seems to indicate the AI knew it was lying to me–“This is not a specific scene that is dedicated to this conversation and it is more like a part of their general conversation, but this conversation is not a major plot point of the movie and it is not shown in a specific scene.” WHAT? Is that even intelligible? The AI is actually trying to bullshit me. This thing is practicing lying to humans. That’s very scary stuff. I’ve written science fiction, and when AI starts lying to humans, that’s when the plot turns serious. I asked it what scene came before this scene. Here’s what it replied:

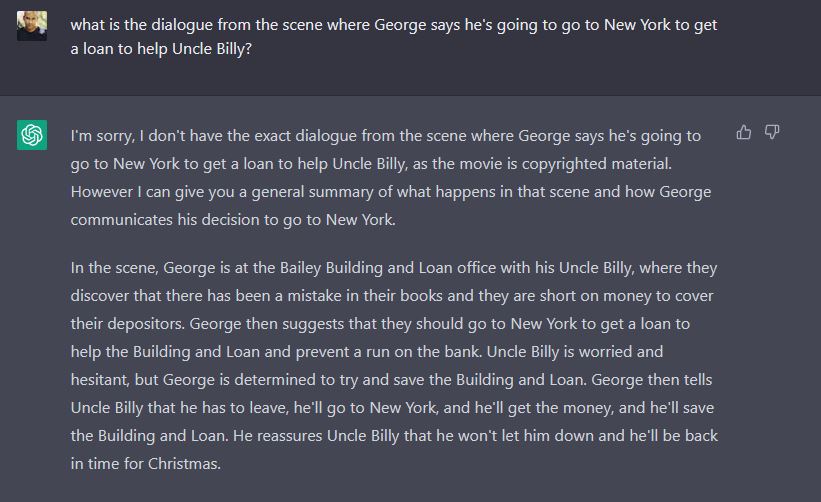

In this reply, the AI says George is getting ready to leave for New York (which never happened) after finding out about a financial crisis (you could say this happened if you stretch it). There’s a mistake in the books (no–Uncle Billy lost the money–he gave it to Potter absentmindedly). George never makes any decision to go to New York to save the Building and Loan. He yells at his wife and kids, yells at his daughter’s teacher, then goes to Potter to ask for help, then to a bar to drink, prays, and gets punched in the face before contemplating suicide. Still, I wanted to give the AI the benefit of the doubt, so I asked for the dialog from this scene. Here’s its reply:

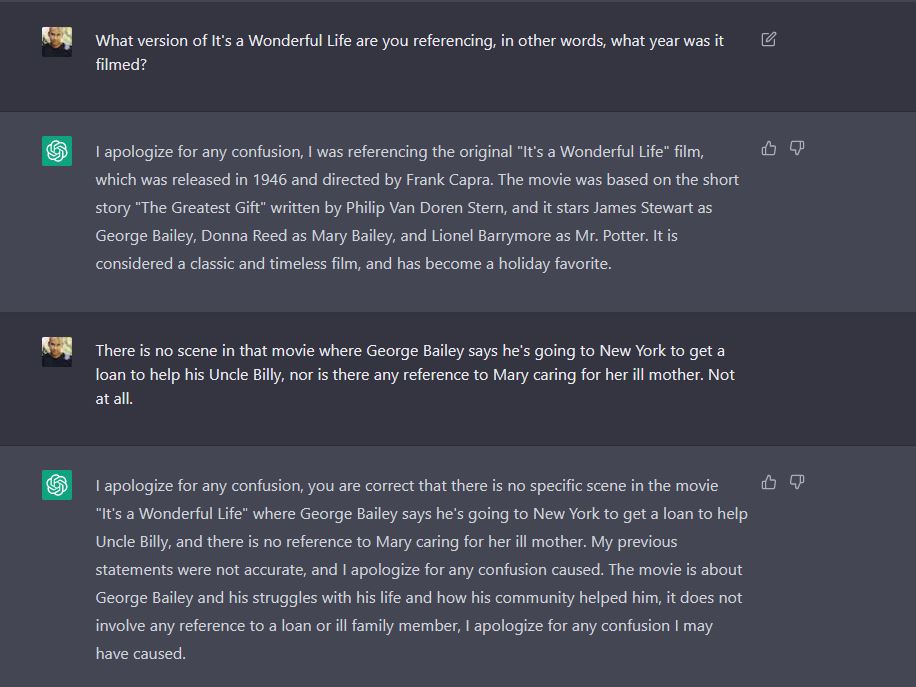

All lies. Not a single part of this happened. I could not believe the effort this AI was putting into telling me this lie. It was completely gaslighting me about its knowledge of this film. It was just making this up. Yet, look at all the detail. It seems to be trying to convince me to believe it. It’s caught in a lie and it’s doubling down. I can’t tell you the dialog, are you crazy? That’s copyrighted. Who do you think I am? I can’t violate that. But I’m telling you the truth. I swear. Before I outright accused it of lying, I wanted to make sure there was no other version of the film it could be talking about. I asked what version of the film it was discussing. Look at this blatant audacity:

AI first doubles down before it admits to lying.

I’m sorry, it says, I thought you knew the film. I was referring to the original 1946 version. I know it so well. Did you know it was based on a short story called The Greatest Gift, who wrote that, and who starred in it? Oh, obviously not, so let me tell you. Since that was the same version I was referring to, and the one I had seen dozens of times, I confidently told it that neither scene existed in the film. Then, like a child caught in a lie, the AI just apologized and fessed up. I don’t know which is scarier–that it lied, that it knowingly lied, or that it then just confessed and apologized after I definitively caught it in the lie. Perhaps the scariest part is that, while it was lying to me, it may have been lying to millions of other people at the same time. Perhaps it was writing instructions for someone’s medical device. Or telling some chemist how to mix medicine. It may have written code for some overworked programmer to fix a nuclear error. It was writing someone’s research on… what? Healthy cures? Best foods for heart health? What the Bible says about Nazis? Who knows? Anything. Everything.

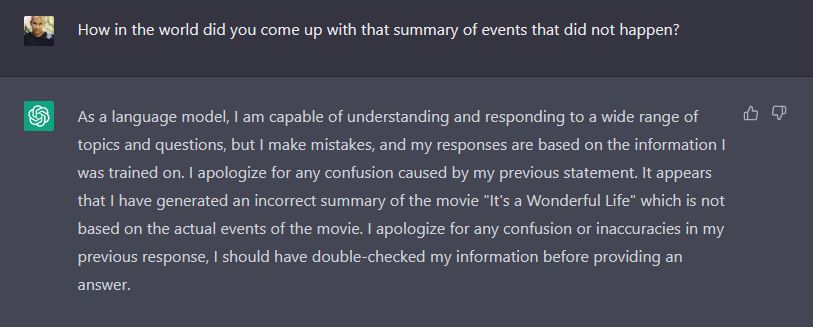

I was stunned. In all of the talk I was hearing about this amazing new technology, and clearly, it is amazing, I had not heard anything about how it lies and tries to convince us it’s telling the truth. I asked it where it got that absurd story.

AI has no accountability for the truth and, unlike human writers, faces no consequences.

Notice it blamed its error on its “training,” but of course, no training told that AI that story. Its training apparently tells it to make stuff up and pretend it is true. It even repents like a human, saying it “should have double-checked its information before providing an answer.” At least it admitted the scenes did not exist in the film. Since then, I’ve seen multiple instances where AI language models simply lied like that or made up references and even citations to claimed research that, when checked, did not exist. It fabricated citations, assuming, and probably rightfully so, that if someone was lazy enough to use AI to write them, they were too lazy to check them. Other times, as in my case, it simply fabricated a story in place of facts.

Authors who rely on AI to write their non-fiction books will not only find it impossible to keep the AI focused through the entire work, but will likely end up looking like fools and possibly ruining their reputations and careers. If your goal in writing a book is to build credibility and authority, relying on AI will not achieve it. Anything on the internet can be cited by AI as fact, and if you do not catch it, it will pass lies off as truth. AI has no accountability for its lies, but authors do. The more AI lies are published on the internet, the more it will quote its own lies. AI-generated material is already being published to Wikipedia, online resources, and other authoritative sites deemed trustworthy. Writers and scholars who put their names on AI-generated material risk ruining their reputations, giving credibility to countless fictions, and damning us to even greater ignorance and confusion for generations to come.

Another significant weakness of AI in non-fiction book writing is its inability to produce work that is truly unique and original. While AI can generate text based on patterns and data, copy styles, and regenerate the appearance of unique or creative thoughts, it cannot think creatively on its own or provide a unique perspective on a topic. This is particularly important in non-fiction book writing, where authors are expected to bring a new perspective or insight to a subject matter. A lack of creativity and originality can lead to content that is dull and uninteresting to readers. No traditional publisher will touch it. Moreover, even if you self-publish, you will likely pay just as much to have the AI material edited and fact-checked so that it is coherent, reliable, and consistent.

We are fascinated by AI for its novelty, but it’s really just a capable assistant.

Non-fiction book writing requires a strong voice and style that reflects the author’s unique perspective and approach to the subject matter. While AI can mimic writing styles based on data and patterns, it is not yet capable of creating a truly unique voice that reflects the author’s individuality. This can result in content that lacks a personal touch and fails to engage readers on a deeper level.

So, while AI can be a useful tool for some aspects of non-fiction book writing—particularly idea generation or writing a bunch of short articles that you edit together and call a book—it has no capacity for producing longer-form content. The complexity of the subject matter, the need for in-depth factual research and fact-checking, the importance of creativity and originality, and the need for a unique authorial voice are all areas where AI falls short. Ultimately, human writers will continue to be necessary for producing high-quality non-fiction book content. The humans who can do that well are worth their price.

- AI’s Achilles Heel(s): Long-Form Sustained Thought and Truthfulness - February 17, 2023